One key ingredient driving the future of 3D technology is a computational problem called SLAM. Simultaneous Localization and Mapping (SLAM) is a family of algorithms that use sensor data to construct a map of an unknown environment while simultaneously using that map to work out where the sensor is located. In other words, SLAM algorithms combine data from one or more sensors to estimate the position of the sensing device and to build a map of the surrounding environment.

SLAM is becoming an increasingly important topic within the computer vision community and is attracting particular interest from industries such as augmented and virtual reality.

This article gives a brief introduction to what SLAM is, what it is for, and why it is important, in the context of computer vision research and development and of augmented reality.

What is SLAM?

SLAM refers to a set of algorithms that work together to solve the simultaneous localization and mapping problem. It can be implemented in many ways. First, a wide range of hardware can be used. Second, SLAM is more a concept than a single algorithm: it involves many steps, and each step can be implemented using a number of different algorithms.

SLAM, which constructs a map of an unknown environment while simultaneously keeping track of the agent’s location within it, is the core technology enabling applications such as these:

- In VR, users would like to interact with objects in the virtual environment without using external controllers.

- In AR, the object being rendered needs to fit in the real-life 3D environment, especially when the user moves.

- Even more importantly, in autonomous vehicles, such as drones, the vehicle must find out its location in a 3D environment.

There are many types of SLAM techniques, depending on the implementation and use case:

EKF SLAM, FastSLAM, graph-based SLAM, topological SLAM and many more. Here I divide them into just two groups, of which visual SLAM is the more interesting from an AR/VR/MR point of view.

- Visual SLAM: monocular, stereo or depth cameras are the primary sensor type.

- Sensor fusion: information from multiple sensors is fused into a single solution.
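
As a minimal illustration of the sensor-fusion idea, the sketch below combines two noisy estimates of the same quantity by inverse-variance weighting, which is the one-dimensional form of a Kalman filter measurement update. The function name `fuse` and the sample readings are illustrative assumptions and are not taken from any particular SLAM library.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the
    more certain sensor contributes more to the fused result.
    """
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_var = 1.0 / (weight_a + weight_b)
    fused_mean = fused_var * (weight_a * mean_a + weight_b * mean_b)
    return fused_mean, fused_var


# Hypothetical readings (metres): a camera-based and an IMU-based position estimate.
camera_estimate = (2.0, 0.04)  # (mean, variance): fairly certain
imu_estimate = (2.6, 0.36)     # (mean, variance): less certain

fused_mean, fused_var = fuse(*camera_estimate, *imu_estimate)
print(f"fused position: {fused_mean:.2f} m, variance: {fused_var:.3f}")
```

The fused variance is always smaller than either input variance, which is the basic reason for combining sensors rather than trusting only one of them.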

Visual SLAM:

- Visual SLAM is currently well suited to tracking in unknown environments, rooms and spaces, and to tracking 3D models or real-world objects, where the primary mode of sensing is a camera. It is the variant of most interest in the context of augmented reality, but many of the themes discussed here apply more generally.

- The requirement to recover both the camera’s position and the map, when neither is known to begin with, distinguishes the SLAM problem from other tasks. For example:

- Marker-based tracking (e.g. Vuforia or Kudan’s Tracker) is not SLAM, because the marker image (analogous to the map) is known beforehand.

- 3D reconstruction with a fixed camera rig is not SLAM either, because while the map (here the model of the object) is being recovered, the positions of the cameras are already known.

- The challenge in SLAM is to recover both camera pose and map structure while initially knowing neither.
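
The two non-SLAM cases above can be sketched in a few lines each. This toy example makes strong simplifying assumptions that are not from the article: the world is 2D, the camera orientation is fixed and known, and each observation is an exact offset from the camera to a labelled landmark. The function names `localize` and `build_map` and the landmark names are hypothetical.

```python
def localize(known_map, observations):
    """Estimate the camera position when the map is already known.

    known_map: {landmark_id: (x, y)} world positions of the landmarks.
    observations: {landmark_id: (dx, dy)} offsets measured from the camera.
    """
    estimates = [
        (known_map[lid][0] - dx, known_map[lid][1] - dy)
        for lid, (dx, dy) in observations.items()
    ]
    n = len(estimates)
    return (sum(x for x, _ in estimates) / n, sum(y for _, y in estimates) / n)


def build_map(camera_position, observations):
    """Estimate landmark positions when the camera position is already known."""
    cx, cy = camera_position
    return {lid: (cx + dx, cy + dy) for lid, (dx, dy) in observations.items()}


# Hypothetical scene: the camera is at (1, 1) and sees two landmarks.
known_map = {"door": (4.0, 1.0), "window": (0.0, 3.0)}
observations = {"door": (3.0, 0.0), "window": (-1.0, 2.0)}

print(localize(known_map, observations))    # map given, pose recovered
print(build_map((1.0, 1.0), observations))  # pose given, map recovered
```

Each function needs the other's output as its input. SLAM is the case where neither input is available at the start, so both must be estimated together.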

SLAM is hard because:

- A map is needed for localization, and

- A good pose estimate is needed for mapping.

- Localization: inferring location given a map

- Mapping: inferring a map given locations

- SLAM: learning a map and locating the robot, object or 3D model within it at the same time
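
These three problems are often written as probability distributions. The notation below is a common textbook convention rather than something defined in this article: $x_{1:t}$ denotes the sequence of poses, $m$ the map, $z_{1:t}$ the sensor observations, and $u_{1:t}$ the motion or odometry inputs.

```latex
\begin{align*}
\text{Localization:} \quad & p(x_{1:t} \mid z_{1:t}, u_{1:t}, m) \\
\text{Mapping:}      \quad & p(m \mid z_{1:t}, x_{1:t}) \\
\text{SLAM:}         \quad & p(x_{1:t}, m \mid z_{1:t}, u_{1:t})
\end{align*}
```

In localization the map sits on the conditioning side, and in mapping the poses do. In SLAM both are unknowns to be estimated jointly from the observations and motion inputs alone.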

How Does SLAM Work?

SLAM is a chicken-or-egg problem:

- A map is needed for localization, and

- A pose estimate is needed for mapping.

SLAM is similar to a person trying to find their way around an unfamiliar place. First, the person looks around for familiar markers or signs. Once they recognize a landmark, they can work out where they are in relation to it. If they recognize no landmarks, they are lost. However, the more the person observes the environment, the more landmarks they recognize, and they begin to build a mental image, or map, of the place.

SLAM is the problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent’s location within it.

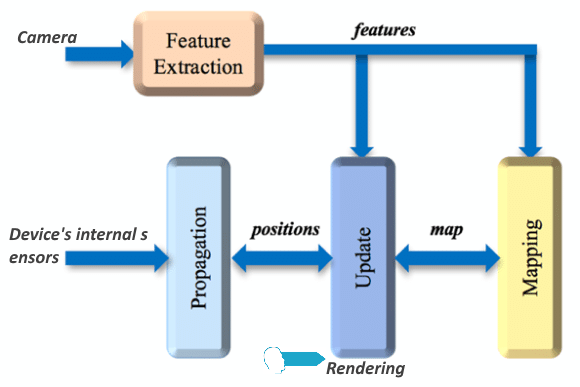

The diagram above shows a simplified version of the general SLAM pipeline, which operates as follows:

- The internal sensor, called the Inertial Measurement Unit (IMU), consists of a gyroscope and related sensors that measure angular velocity, and accelerometers that measure acceleration along three axes, which together capture the user’s movement.

- The main task of the Propagation Unit is to integrate the IMU data points and produce a new position. However, since IMU hardware usually has bias and inaccuracies, these errors accumulate over time as drift, and we cannot fully rely on the propagated position.

- To correct the drift problem, we use a camera to capture frames along the path at a fixed rate, such as 60 FPS. Many modern devices also have a dedicated depth-sensing camera.

- The frames captured by the camera are fed to the Feature Extraction Unit, which extracts useful corner features and generates a descriptor for each feature.

- The extracted features are then fed to the Mapping Unit, which extends the map as the agent explores.

- The detected features are also sent to the Update Unit, which compares them to the map. If the detected features already exist in the map, the Update Unit can derive the agent’s current position from the known map points.

- Using this new position, the Update Unit corrects the drift introduced by the Propagation Unit. The Update Unit also updates the map with the newly detected feature points.
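
The propagate, map and update steps above can be sketched as a toy one-dimensional loop. This is a deliberately simplified illustration, not the pipeline of any real SLAM system. Its assumptions are loud ones: the world is a single axis, feature extraction and descriptor matching are replaced by pre-labelled landmark ids, the camera measures exact offsets, and the correction is a fixed-gain blend rather than a proper filter. The class name `TinySlam1D`, the `gain` parameter and the bias value are all hypothetical.

```python
class TinySlam1D:
    """Toy 1D version of the propagate / map / update loop."""

    def __init__(self, gain=0.8):
        self.position = 0.0
        self.velocity = 0.0
        self.landmarks = {}  # landmark id -> estimated world position (the map)
        self.gain = gain     # how strongly a camera fix overrides the IMU estimate

    def propagate(self, accel, dt):
        """Propagation Unit: integrate one IMU acceleration sample."""
        self.velocity += accel * dt
        self.position += self.velocity * dt

    def update(self, observations):
        """Update + Mapping Units. observations: landmark id -> offset from agent."""
        # Each landmark already in the map implies an agent position.
        implied = [self.landmarks[lid] - offset
                   for lid, offset in observations.items()
                   if lid in self.landmarks]
        if implied:
            camera_position = sum(implied) / len(implied)
            # Pull the drifting IMU estimate toward the camera-derived position.
            self.position += self.gain * (camera_position - self.position)
        # Landmarks seen for the first time extend the map.
        for lid, offset in observations.items():
            if lid not in self.landmarks:
                self.landmarks[lid] = self.position + offset


def run_demo():
    slam = TinySlam1D()
    true_position = 0.0   # the agent is actually standing still
    landmark_true = 5.0   # true position of one landmark
    imu_bias = 0.5        # hypothetical accelerometer bias in m/s^2

    slam.update({"corner": landmark_true - true_position})  # first sighting: mapped
    for _ in range(10):
        slam.propagate(accel=imu_bias, dt=0.1)              # bias alone causes drift
    drift_before = abs(slam.position - true_position)
    slam.update({"corner": landmark_true - true_position})  # re-observation corrects
    drift_after = abs(slam.position - true_position)
    return drift_before, drift_after


before, after = run_demo()
print(f"drift before update: {before:.3f} m, after update: {after:.3f} m")
```

In this sketch the update only corrects the position, not the velocity, so drift would build up again on the next propagation steps. Real systems typically estimate the IMU bias as part of the state for that reason.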

Development Opportunities and Solutions

SLAM has become very popular because it can work with nothing more than a standard camera and the basic sensors built into a mobile device. This makes SLAM systems very appealing, both as an area of research and as a key enabling technology for applications such as augmented reality.

- In an effort to democratize the development of simultaneous localization and mapping (SLAM) technology, Google has open-sourced its Cartographer library for mapping environments in both 2D and 3D.

- Cartographer, as described on its official blog, is a real-time simultaneous localization and mapping (SLAM) library in 2D and 3D with ROS support.

- At Facebook’s annual F8 conference, the company announced a big push into augmented reality (AR) and virtual reality (VR). Facebook founder Mark Zuckerberg said: “We’re making the camera the first AR platform”. Behind all the hype is a core technology known as SLAM.

Qualcomm Research: Enabling AR in unknown environments

Qualcomm Research’s computer vision efforts are focused on developing novel technology to ‘Enable augmented reality (AR) experiences in unknown environments’. A critical step in enabling such experiences involves tracking the camera pose with respect to the scene.

- In target-based AR, a known object in the scene is used to compute the camera pose in relation to it. The known object is most commonly a planar object; however, it can also be a 3D object whose model of geometry and appearance is available to the AR application.

- Tracking the camera pose in unknown environments can be a challenge. SLAM is a leading technology for addressing this challenge: it enables AR experiences on mobile devices in unknown environments.

Qualcomm Research has designed and demonstrated novel techniques for modeling an unknown scene in 3D and using the model to track the pose of the camera with respect to the scene. This technology is a keyframe-based SLAM solution that assists with building room-sized 3D models of a particular scene.

Applications of SLAM:

SLAM is a key driver behind unmanned vehicles and drones, self-driving cars, robotics, and augmented reality applications. It is central to a range of indoor, outdoor, in-air and underwater applications for both manned and autonomous vehicles.

- Swedish company 13th Lab, which developed an SDK called Pointcloud, was purchased by Facebook Inc., which planned to use the team’s knowledge in the Oculus Rift division.

- German AR company Metaio was purchased by Apple Inc. shortly after announcing a SLAM capability in its SDK. To my knowledge, Metaio’s technology is a technical basis for Apple ARKit.

A number of important tasks, such as tracking, augmented reality, map reconstruction, interaction between real and virtual objects, object tracking and 3D modeling, can all be accomplished using a SLAM system. The availability of such technology will lead to further developments and increased sophistication in augmented reality applications.
